Scripts for converting a Keras h5 model to a TensorFlow Serving model and for accessing it with a RESTful client


For how to deploy the model and start the service, see here.

I.

h5_2_pd.py: this script converts an h5 model into a SavedModel.

#!/usr/bin/env python
# -*- coding: utf-8 -*-
# @Date    : 2019-07-26 17:19:07
# @Author  :
# @Email   : None
# @Version : py3
# Written against the TensorFlow 1.x API (tf.get_default_graph, SavedModelBuilder).

import os

import tensorflow as tf
import tensorflow.keras.backend as K

# from mymodel import captcha_model as model


def export_model(model, export_model_dir, model_version):
    """
    :param model: loaded Keras model
    :param export_model_dir: type string, save dir for exported model
    :param model_version: version number, preferably an int
    :return: no return
    """
    with tf.get_default_graph().as_default():
        # prediction_signature
        tensor_info_input = tf.saved_model.utils.build_tensor_info(model.input)
        # Fixed: the original assigned model.output to tensor_info_input,
        # so the signature used the output tensor as its input as well.
        tensor_info_output = tf.saved_model.utils.build_tensor_info(model.output)
        prediction_signature = (
            tf.saved_model.signature_def_utils.build_signature_def(
                inputs={'images': tensor_info_input},  # TensorInfo
                outputs={'result': tensor_info_output},
                method_name=tf.saved_model.signature_constants.PREDICT_METHOD_NAME))
        print('step1 => prediction_signature created successfully')

        # set-up a builder
        export_path = os.path.join(export_model_dir, str(model_version))
        builder = tf.saved_model.builder.SavedModelBuilder(export_path)
        builder.add_meta_graph_and_variables(
            # tags: SERVING, TRAINING, EVAL, GPU, TPU
            sess=K.get_session(),
            tags=[tf.saved_model.tag_constants.SERVING],
            signature_def_map={'prediction_signature': prediction_signature},
        )
        print('step2 => Export path(%s) ready to export trained model' % export_path,
              '\n starting to export model...')
        builder.save(as_text=True)
        print('Done exporting!')


def export_model2(model, export_model_dir, model_version):
    """
    :param model: loaded Keras model
    :param export_model_dir: type string, save dir for exported model
    :param model_version: version number, preferably an int
    :return: no return
    """
    with tf.get_default_graph().as_default():
        # prediction_signature
        tensor_info_input = tf.saved_model.utils.build_tensor_info(model.input)
        tensor_info_output = tf.saved_model.utils.build_tensor_info(model.output)
        print(model.output.shape, '**', tensor_info_output)
        prediction_signature = (
            tf.saved_model.signature_def_utils.build_signature_def(
                inputs={'images': tensor_info_input},  # TensorInfo
                outputs={'result': tensor_info_output},
                # Same value as tf.saved_model.signature_constants.PREDICT_METHOD_NAME
                method_name="tensorflow/serving/predict")
        )
        print('step1 => prediction_signature created successfully')

        # set-up a builder
        # Create the base directory if needed; the version sub-directory
        # itself must not exist yet, SavedModelBuilder creates it.
        os.makedirs(export_model_dir, exist_ok=True)
        export_path = os.path.join(export_model_dir, str(model_version))
        builder = tf.saved_model.builder.SavedModelBuilder(export_path)
        builder.add_meta_graph_and_variables(
            # tags: SERVING, TRAINING, EVAL, GPU, TPU
            sess=K.get_session(),
            tags=[tf.saved_model.tag_constants.SERVING],
            signature_def_map={
                'predict': prediction_signature,
                tf.saved_model.signature_constants.DEFAULT_SERVING_SIGNATURE_DEF_KEY:
                    prediction_signature,
            },
        )
        print('step2 => Export path(%s) ready to export trained model' % export_path,
              '\n starting to export model...')
        # builder.save(as_text=True)
        builder.save()
        print('Done exporting!')


if __name__ == '__main__':
    # Specify the paths and the model version number
    model = tf.keras.models.load_model('..../model.h5')
    export_model2(
        model,
        './export_modeltest',
        1
    )
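SavedModelBuilder refuses to write into an export path that already exists, so running the script twice with the same version number fails. One possible way around this is to pick the next free version number automatically. The sketch below is only an illustration: `next_model_version` is a hypothetical helper (not part of TensorFlow), and it assumes that versions are stored as sub-directories named with plain integers, as in `./export_modeltest/1`.

```python
import os


def next_model_version(export_model_dir):
    """Return one more than the highest existing version number.

    Hypothetical helper: assumes versions live in sub-directories whose
    names are plain integers (e.g. export_modeltest/1, export_modeltest/2).
    Returns 1 when the directory is missing or holds no version yet.
    """
    if not os.path.isdir(export_model_dir):
        return 1
    versions = [
        int(name)
        for name in os.listdir(export_model_dir)
        if name.isdecimal() and os.path.isdir(os.path.join(export_model_dir, name))
    ]
    return max(versions, default=0) + 1
```

It could then be used as `export_model2(model, './export_modeltest', next_model_version('./export_modeltest'))`, so that each export lands in a new version directory.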

II.

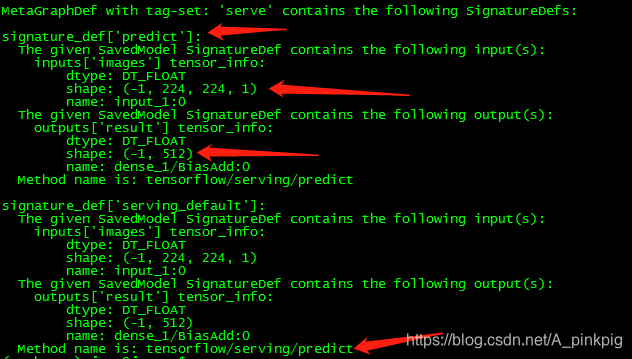

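The client script. This script accesses the model through the RESTful API. In my view, the key point is understanding the format of your model's input and output data. The input is the shape and data type of the network's input tensor; the output is the format of the data the network returns. Both can be inspected with TensorFlow's saved_model_cli command, used as shown below. The command is only available in a Python environment where TensorFlow is installed.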

saved_model_cli show --dir ...../resnet/1 --all
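saved_model_cli reports a shape for every input of a signature, with -1 standing for a free dimension such as the batch size. As a rough sketch of how that information can be used, the helpers below check a nested-list payload against such a shape before it is sent. `nested_shape` and `matches_signature` are hypothetical names, and the shape `(-1, 224, 224, 1)` is an assumption derived from the 224x224 grayscale preprocessing in the client script, not a value read from a real model.

```python
def nested_shape(data):
    """Return the shape of a nested list as a tuple of ints.

    Only the first element along each axis is inspected, so the data is
    assumed to be regular (all rows have the same length).
    """
    shape = []
    while isinstance(data, (list, tuple)):
        shape.append(len(data))
        if not data:
            break
        data = data[0]
    return tuple(shape)


def matches_signature(data, expected_shape):
    """Compare a payload's shape with a signature shape; -1 matches any size."""
    actual = nested_shape(data)
    if len(actual) != len(expected_shape):
        return False
    return all(e == -1 or e == a for a, e in zip(actual, expected_shape))


# Assumed shape for a batch of 224x224 single-channel images.
EXPECTED = (-1, 224, 224, 1)
batch = [[[[0.0] for _ in range(224)] for _ in range(224)]]  # one image

print(matches_signature(batch, EXPECTED))     # True
print(matches_signature(batch[0], EXPECTED))  # False: batch dimension missing
```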

#!/usr/bin/env python
# -*- coding: utf-8 -*-
# @Date    : 2019-07-26 17:19:07
# @Author  :
# @Email   : None
# @Version : py3

from __future__ import print_function

import json
import sys

import cv2
import numpy as np
import requests

# The server URL specifies the endpoint of your server running the ResNet
# model with the name "resnet" and using the predict interface.
SERVER_URL = 'http://localhost:8501/v1/models/resnet:predict'


# get_inputs preprocesses the images to predict on; it must apply the same
# preprocessing that was used when training the model.
def get_inputs(src=()):
    pre_x = []
    for s in src:
        img = cv2.imread(s)
        if img is None:
            # cv2.imread returns None instead of raising when the file is unreadable
            raise IOError('cannot read image: %s' % s)
        img = cv2.resize(img, (224, 224))
        img = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
        pre_x.append(img)  # one image per entry
    pre_x = np.asarray(pre_x) / 255.0
    return pre_x


class NumpyEncoder(json.JSONEncoder):
    def default(self, obj):
        if isinstance(obj, np.ndarray):
            return obj.tolist()
        return json.JSONEncoder.default(self, obj)


def main(image_path):
    # Preprocess a single image: shape (1, 224, 224).
    pre_x = get_inputs([image_path])
    # Change the format of the input data to suit the model: (1, 224, 224, 1).
    pre_x = np.reshape(pre_x, (pre_x.shape[1], pre_x.shape[2], 1))
    input_data = np.expand_dims(pre_x, axis=0)
    # Columnar request format: the response is returned under "outputs".
    p = {'inputs': input_data}
    # to json
    param = json.dumps(p, cls=NumpyEncoder)
    # Send a few requests to warm up the model.
    for _ in range(3):
        response = requests.post(SERVER_URL, data=param)
        response.raise_for_status()
    # Send the actual request.
    response = requests.post(SERVER_URL, data=param)
    response.raise_for_status()
    prediction = response.json()['outputs'][0]
    prediction = np.array(prediction)
    # Output the final prediction, i.e. the class with the highest score.
    pre_result_index = np.argsort(-prediction)[0]
    print(pre_result_index)


if __name__ == '__main__':
    # The original referenced an undefined name (imglist); reading the image
    # path from the command line is an assumption made here.
    main(sys.argv[1])
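The client sends its data under the "inputs" key and reads the result from "outputs", which is the columnar request format of the TensorFlow Serving REST API. The same API also accepts a row format, where the data goes under "instances" and the result comes back under "predictions". The sketch below builds both kinds of body without touching the network. `build_predict_body` and `top_class` are hypothetical helpers; the input name `images` is the key used in the signature exported by h5_2_pd.py.

```python
import json


def build_predict_body(batch, input_name=None, row_format=False):
    """Build the JSON body for a TensorFlow Serving REST predict call.

    batch: nested list with the batch dimension first.
    input_name: optional signature input key (e.g. 'images'); when None the
        bare tensor is sent, which works for single-input signatures.
    row_format: use {"instances": ...} instead of {"inputs": ...}.
    """
    if row_format:
        rows = [{input_name: item} for item in batch] if input_name else list(batch)
        body = {"instances": rows}
    else:
        body = {"inputs": {input_name: batch} if input_name else batch}
    return json.dumps(body)


def top_class(response_json):
    """Return the index of the highest score for the first item in the batch.

    Assumes a single-output model, so the value under "outputs" or
    "predictions" is a plain list of score lists.
    """
    key = "outputs" if "outputs" in response_json else "predictions"
    scores = response_json[key][0]
    return max(range(len(scores)), key=scores.__getitem__)


batch = [[0.1, 0.2], [0.3, 0.4]]
print(build_predict_body(batch))
# {"inputs": [[0.1, 0.2], [0.3, 0.4]]}
print(build_predict_body(batch, input_name="images", row_format=True))
# {"instances": [{"images": [0.1, 0.2]}, {"images": [0.3, 0.4]}]}
print(top_class({"outputs": [[0.1, 0.7, 0.2]]}))  # 1
```

Which format to use is mostly a matter of taste for a single-input model; the row format tends to read more naturally when each instance carries several named inputs.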
