第十九周总结(A3C、Lingo、贝塞尔)

第十九周的回顾序言python贝塞尔曲线深度强化学习A2CA3CLingo序言这一周都没有算leetcode,也没有记录博客,所有的精力都放在了论文回稿上总结一下这一周的所学python学习了很久的java,回头重新看python,发现python真的是随意的很,没有强制定义类,可以面向对象,可以面向过程贝塞尔曲线关于有序散点轨迹的拟合python神经网络贝塞尔曲线深度强化学习A2CA3CLing

序言

这一周都没有算leetcode,也没有记录博客,所有的精力都放在了论文回稿上

总结一下这一周的所学

python

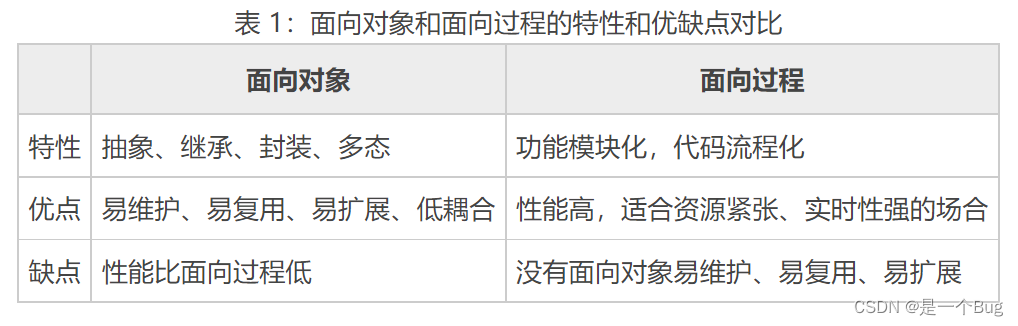

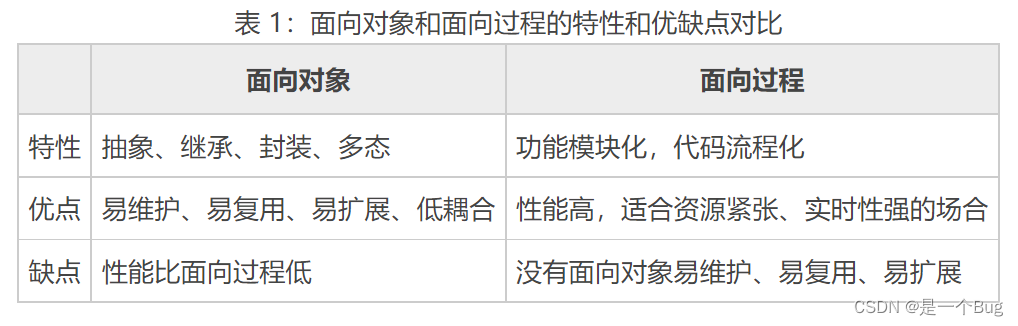

学习了很久的java,回头重新看python,发现python真的是随意的很,没有强制定义类,可以面向对象,可以面向过程

散点拟合曲线

关于有序散点轨迹的拟合

- 贝塞尔曲线是我正在用的,又快又高效,会丢失一定的信息

- 如果需要更精确的点信息,建议dp网络。

- 如果数据是有序的,建议python中的scipy

python-scipy

插值:简单来说,插值就是根据原有数据进行填充,最后生成的曲线一定过原有点。

拟合:拟合是通过原有数据,调整曲线系数,使得曲线与已知点集的差别(最小二乘)最小,最后生成的曲线不一定经过原有点。

# 这里要确定好顺序

import matplotlib.pyplot as plt

import numpy as np

from scipy import interpolate

#设置距离

x =np.array([0, 1, 1.5, 2, 2.5, 3, 3.5, 4, 4.5, 5, 5.5, 6, 6.5, 70, 8, 9,10])

#设置相似度

y =np.array([0.8579087793827057, 0.8079087793827057, 0.7679087793827057, 0.679087793827057,

0.5579087793827057, 0.4579087793827057, 0.3079087793827057, 0.3009087793827057,

0.2579087793827057, 0.2009087793827057, 0.1999087793827057, 0.1579087793827057,

0.0099087793827057, 0.0079087793827057, 0.0069087793827057, 0.0019087793827057,

0.0000087793827057])

#插值法之后的x轴值,表示从0到10间距为0.5的200个数

xnew =np.arange(0,10,0.1)

#实现函数

func = interpolate.interp1d(x,y,kind='cubic')

#利用xnew和func函数生成ynew,xnew数量等于ynew数量

ynew = func(xnew)

# 原始折线

plt.plot(x, y, "r", linewidth=1)

#平滑处理后曲线

plt.plot(xnew,ynew)

#设置x,y轴代表意思

plt.xlabel("The distance between POI and user(km)")

plt.ylabel("probability")

#设置标题

plt.title("The content similarity of different distance")

#设置x,y轴的坐标范围

plt.xlim(0,10,8)

plt.ylim(0,1)

plt.show()

神经网络

补充

贝塞尔曲线

这个是我正在用的,又快又高效,但是如果需要更精确的点信息,建议dp网络。

如果数据是有序的,建议python中的scipy

import matplotlib.pyplot as plt

import numpy as np

import math

state = np.load('S_record_REAL.npy')[155]

state = state[:,0:2]

# episode = 50

x1 = state[:,0]

y1 = state[:,1]

class Bezier:

# 输入控制点,Points是一个array,num是控制点间的插补个数

def __init__(self,Points,InterpolationNum):

self.demension=Points.shape[1] # 点的维度

self.order=Points.shape[0]-1 # 贝塞尔阶数=控制点个数-1

self.num=InterpolationNum # 相邻控制点的插补个数

self.pointsNum=Points.shape[0] # 控制点的个数

self.Points=Points

# 获取Bezeir所有插补点

def getBezierPoints(self,method):

if method==0:

return self.DigitalAlgo()

if method==1:

return self.DeCasteljauAlgo()

# 数值解法

def DigitalAlgo(self):

PB=np.zeros((self.pointsNum,self.demension)) # 求和前各项

pis =[] # 插补点

for u in np.arange(0,1+1/self.num,1/self.num):

for i in range(0,self.pointsNum):

PB[i]=(math.factorial(self.order)/(math.factorial(i)*math.factorial(self.order-i)))*(u**i)*(1-u)**(self.order-i)*self.Points[i]

pi=sum(PB).tolist() #求和得到一个插补点

pis.append(pi)

return np.array(pis)

# 德卡斯特里奥解法

def DeCasteljauAlgo(self):

pis =[] # 插补点

for u in np.arange(0,1+1/self.num,1/self.num):

Att=self.Points

for i in np.arange(0,self.order):

for j in np.arange(0,self.order-i):

Att[j]=(1.0-u)*Att[j]+u*Att[j+1]

pis.append(Att[0].tolist())

return np.array(pis)

class Line:

def __init__(self,Points,InterpolationNum):

self.demension=Points.shape[1] # 点的维数

self.segmentNum=InterpolationNum-1 # 段数

self.num=InterpolationNum # 单段插补(点)数

self.pointsNum=Points.shape[0] # 点的个数

self.Points=Points # 所有点信息

def getLinePoints(self):

# 每一段的插补点

pis=np.array(self.Points[0])

# i是当前段

for i in range(0,self.pointsNum-1):

sp=self.Points[i]

ep=self.Points[i+1]

dp=(ep-sp)/(self.segmentNum)# 当前段每个维度最小位移

for i in range(1,self.num):

pi=sp+i*dp

pis=np.vstack((pis,pi))

return pis

# points=np.array([

# ])

points= state

if points.shape[1]==3:

fig=plt.figure()

ax = fig.gca(projection='3d')

# 标记控制点

for i in range(0,points.shape[0]):

ax.scatter(points[i][0],points[i][1],points[i][2],marker='o',color='r')

ax.text(points[i][0],points[i][1],points[i][2],i,size=12)

# 直线连接控制点

l=Line(points,1000)

pl=l.getLinePoints()

ax.plot3D(pl[:,0],pl[:,1],pl[:,2],color='k')

# 贝塞尔曲线连接控制点

bz=Bezier(points,1000)

matpi=bz.getBezierPoints(0)

ax.plot3D(matpi[:,0],matpi[:,1],matpi[:,2],color='r')

plt.show()

if points.shape[1]==2:

# 标记控制点

# for i in range(0,points.shape[0]):

# plt.scatter(points[i][0],points[i][1],marker='o',color='r')

# plt.text(points[i][0],points[i][1],i,size=12)

# 直线连接控制点

l=Line(points,1000)

pl=l.getLinePoints()

# plt.plot(pl[:,0],pl[:,1],color='k')

# 贝塞尔曲线连接控制点

bz=Bezier(points,1000)

matpi=bz.getBezierPoints(1)

```plt.plot(matpi[:,0],matpi[:,1],color='r')

plt.show()

深度强化学习

A2C

i

mport multiprocessing

import threading

import tensorflow.compat.v1 as tf

import numpy as np

# from NB_NOMA import NOMA

from sea_environment_ppo import SeaOfEnv

import os

import shutil

import matplotlib.pyplot as plt

tf.disable_eager_execution()

import time

#N_WORKERS = multiprocessing.cpu_count()

N_WORKERS = 1

MAX_EP_STEP = 50

MAX_GLOBAL_EP = 1500

GLOBAL_NET_SCOPE = 'Global_Net'

UPDATE_GLOBAL_ITER = 1036

GAMMA = 0.9

ENTROPY_BETA = 0.01

LR_A = 0.0002 # learning rate for actor

LR_C = 0.0002 # learning rate for critic

GLOBAL_RUNNING_R = []

GLOBAL_EP = 0

N_S = 2

N_A = 1

A_BOUND = [-0.68, 0.66]

env = SeaOfEnv(N_S,N_A)

class ACNet(object):

def __init__(self, scope, globalAC=None):

if scope == GLOBAL_NET_SCOPE: # get global network

with tf.variable_scope(scope):

self.s = tf.placeholder(tf.float32, [None, N_S], 'S')

self.a_params, self.c_params = self._build_net(scope)[-2:]

else: # local net, calculate losses

with tf.variable_scope(scope):

self.s = tf.placeholder(tf.float32, [None, N_S], 'S')

self.a_his = tf.placeholder(tf.float32, [None, N_A], 'A')

self.v_target = tf.placeholder(tf.float32, [None, 1], 'Vtarget')

mu, sigma, self.v, self.a_params, self.c_params = self._build_net(scope)

td = tf.subtract(self.v_target, self.v, name='TD_error')

with tf.name_scope('c_loss'):

self.c_loss = tf.reduce_mean(tf.square(td))

with tf.name_scope('wrap_a_out'):

mu, sigma = mu * A_BOUND[1], sigma + 1e-4

normal_dist = tf.distributions.Normal(mu, sigma)

with tf.name_scope('a_loss'):

log_prob = normal_dist.log_prob(self.a_his)

exp_v = log_prob * tf.stop_gradient(td)

entropy = normal_dist.entropy() # encourage exploration

self.exp_v = ENTROPY_BETA * entropy + exp_v

self.a_loss = tf.reduce_mean(-self.exp_v)

with tf.name_scope('choose_a'): # use local params to choose action

self.A = tf.clip_by_value(tf.squeeze(normal_dist.sample(1), axis=[0, 1]), A_BOUND[0], A_BOUND[1])

with tf.name_scope('local_grad'):

self.a_grads = tf.gradients(self.a_loss, self.a_params)

self.c_grads = tf.gradients(self.c_loss, self.c_params)

with tf.name_scope('sync'):

with tf.name_scope('pull'):

self.pull_a_params_op = [l_p.assign(g_p) for l_p, g_p in zip(self.a_params, globalAC.a_params)]

self.pull_c_params_op = [l_p.assign(g_p) for l_p, g_p in zip(self.c_params, globalAC.c_params)]

with tf.name_scope('push'):

self.update_a_op = OPT_A.apply_gradients(zip(self.a_grads, globalAC.a_params))

self.update_c_op = OPT_C.apply_gradients(zip(self.c_grads, globalAC.c_params))

def _build_net(self, scope):

w_init = tf.random_normal_initializer(0., .1)

with tf.variable_scope('actor'):

l_a = tf.layers.dense(self.s, 128, tf.nn.relu6, kernel_initializer=w_init, name='la')

mu = tf.layers.dense(l_a, N_A, tf.nn.tanh, kernel_initializer=w_init, name='mu')

sigma = tf.layers.dense(l_a, N_A, tf.nn.softplus, kernel_initializer=w_init, name='sigma')

with tf.variable_scope('critic'):

l_c = tf.layers.dense(self.s, 64, tf.nn.relu6, kernel_initializer=w_init, name='lc')

v = tf.layers.dense(l_c, 1, kernel_initializer=w_init, name='v') # state value

a_params = tf.get_collection(tf.GraphKeys.TRAINABLE_VARIABLES, scope=scope + '/actor')

c_params = tf.get_collection(tf.GraphKeys.TRAINABLE_VARIABLES, scope=scope + '/critic')

return mu, sigma, v, a_params, c_params

def update_global(self, feed_dict): # run by a local

SESS.run([self.update_a_op, self.update_c_op], feed_dict) # local grads applies to global net

def pull_global(self): # run by a local

SESS.run([self.pull_a_params_op, self.pull_c_params_op])

def choose_action(self, s): # run by a local

s = s[np.newaxis, :]

return SESS.run(self.A, {self.s: s})

class Worker(object):

def __init__(self, name, globalAC):

self.env = SeaOfEnv(N_S,N_A)

self.name = name

self.AC = ACNet(name, globalAC)

def work(self):

global GLOBAL_RUNNING_R, GLOBAL_EP

total_step = 1

a3c_r = []

buffer_s, buffer_a, buffer_r = [], [], []

while not COORD.should_stop() and GLOBAL_EP < MAX_GLOBAL_EP:

s = self.env.reset()

ep_r = 0

for ep_t in range(MAX_EP_STEP):

# if self.name == 'W_0':

# self.env.render()

# s = self.env.reset()

a = self.AC.choose_action(s)

s_, r = self.env.step(a, s)

# print(s)

done = True if ep_t == MAX_EP_STEP - 1 else False

ep_r += r / MAX_EP_STEP

s = s_

total_step += 1

buffer_s.append(s)

buffer_a.append(a)

buffer_r.append(r)

# normalize

if total_step % UPDATE_GLOBAL_ITER == 0 or done: # update global and assign to local net

if done:

v_s_ = 0 # terminal

else:

v_s_ = SESS.run(self.AC.v, {self.AC.s: s_[np.newaxis, :]})[0, 0]

buffer_v_target = []

for r in buffer_r[::-1]: # reverse buffer r

v_s_ = r + GAMMA * v_s_

buffer_v_target.append(v_s_)

buffer_v_target.reverse()

buffer_s, buffer_a, buffer_v_target = np.vstack(buffer_s), np.vstack(buffer_a), np.vstack(buffer_v_target)

feed_dict = {

self.AC.s: buffer_s,

self.AC.a_his: buffer_a,

self.AC.v_target: buffer_v_target,

}

self.AC.update_global(feed_dict)

buffer_s, buffer_a, buffer_r = [], [], []

self.AC.pull_global()

if done:

if len(GLOBAL_RUNNING_R) == 0: # record running episode reward

GLOBAL_RUNNING_R.append(ep_r)

else:

GLOBAL_RUNNING_R.append(0.9 * GLOBAL_RUNNING_R[-1] + 0.1 * ep_r)

print(

# self.name,

# "Ep:", GLOBAL_EP,

# "| Ep_r: %i" % GLOBAL_RUNNING_R[-1],

"%.2f" %GLOBAL_RUNNING_R[-1]

)

GLOBAL_EP += 1

break

if __name__ == "__main__":

SESS = tf.Session()

t1 = time.time()

with tf.device("/cpu:0"):

OPT_A = tf.train.RMSPropOptimizer(LR_A, name='RMSPropA')

OPT_C = tf.train.RMSPropOptimizer(LR_C, name='RMSPropC')

GLOBAL_AC = ACNet(GLOBAL_NET_SCOPE) # we only need its params

workers = []

# Create worker

for i in range(N_WORKERS):

i_name = 'W_%i' % i # worker name

workers.append(Worker(i_name, GLOBAL_AC))

COORD = tf.train.Coordinator()

SESS.run(tf.global_variables_initializer())

worker_threads = []

for worker in workers:

job = lambda: worker.work()

t = threading.Thread(target=job)

t.start()

worker_threads.append(t)

COORD.join(worker_threads)

print('Running time: ', time.time() - t1)

np.save('ppo_r', GLOBAL_RUNNING_R)

plt.plot(np.arange(len(GLOBAL_RUNNING_R)), GLOBAL_RUNNING_R)

plt.xlabel('step')

plt.ylabel('Total moving reward')

plt.show()

A3C

import multiprocessing

import threading

import tensorflow.compat.v1 as tf

import numpy as np

# from NB_NOMA import NOMA

from sea_environment_ppo import SeaOfEnv

import os

import shutil

import matplotlib.pyplot as plt

tf.disable_eager_execution()

import time

#N_WORKERS = multiprocessing.cpu_count()

N_WORKERS = 8

MAX_EP_STEP = 50

MAX_GLOBAL_EP = 1500

GLOBAL_NET_SCOPE = 'Global_Net'

UPDATE_GLOBAL_ITER = 1036

GAMMA = 0.9

ENTROPY_BETA = 0.01

LR_A = 0.0002 # learning rate for actor

LR_C = 0.0002 # learning rate for critic

GLOBAL_RUNNING_R = []

GLOBAL_EP = 0

N_S = 2

N_A = 1

A_BOUND = [-0.68, 0.66]

env = SeaOfEnv(N_S,N_A)

class ACNet(object):

def __init__(self, scope, globalAC=None):

if scope == GLOBAL_NET_SCOPE: # get global network

with tf.variable_scope(scope):

self.s = tf.placeholder(tf.float32, [None, N_S], 'S')

self.a_params, self.c_params = self._build_net(scope)[-2:]

else: # local net, calculate losses

with tf.variable_scope(scope):

self.s = tf.placeholder(tf.float32, [None, N_S], 'S')

self.a_his = tf.placeholder(tf.float32, [None, N_A], 'A')

self.v_target = tf.placeholder(tf.float32, [None, 1], 'Vtarget')

mu, sigma, self.v, self.a_params, self.c_params = self._build_net(scope)

td = tf.subtract(self.v_target, self.v, name='TD_error')

with tf.name_scope('c_loss'):

self.c_loss = tf.reduce_mean(tf.square(td))

with tf.name_scope('wrap_a_out'):

mu, sigma = mu * A_BOUND[1], sigma + 1e-4

normal_dist = tf.distributions.Normal(mu, sigma)

with tf.name_scope('a_loss'):

log_prob = normal_dist.log_prob(self.a_his)

exp_v = log_prob * tf.stop_gradient(td)

entropy = normal_dist.entropy() # encourage exploration

self.exp_v = ENTROPY_BETA * entropy + exp_v

self.a_loss = tf.reduce_mean(-self.exp_v)

with tf.name_scope('choose_a'): # use local params to choose action

self.A = tf.clip_by_value(tf.squeeze(normal_dist.sample(1), axis=[0, 1]), A_BOUND[0], A_BOUND[1])

with tf.name_scope('local_grad'):

self.a_grads = tf.gradients(self.a_loss, self.a_params)

self.c_grads = tf.gradients(self.c_loss, self.c_params)

with tf.name_scope('sync'):

with tf.name_scope('pull'):

self.pull_a_params_op = [l_p.assign(g_p) for l_p, g_p in zip(self.a_params, globalAC.a_params)]

self.pull_c_params_op = [l_p.assign(g_p) for l_p, g_p in zip(self.c_params, globalAC.c_params)]

with tf.name_scope('push'):

self.update_a_op = OPT_A.apply_gradients(zip(self.a_grads, globalAC.a_params))

self.update_c_op = OPT_C.apply_gradients(zip(self.c_grads, globalAC.c_params))

def _build_net(self, scope):

w_init = tf.random_normal_initializer(0., .1)

with tf.variable_scope('actor'):

l_a = tf.layers.dense(self.s, 128, tf.nn.relu6, kernel_initializer=w_init, name='la')

mu = tf.layers.dense(l_a, N_A, tf.nn.tanh, kernel_initializer=w_init, name='mu')

sigma = tf.layers.dense(l_a, N_A, tf.nn.softplus, kernel_initializer=w_init, name='sigma')

with tf.variable_scope('critic'):

l_c = tf.layers.dense(self.s, 64, tf.nn.relu6, kernel_initializer=w_init, name='lc')

v = tf.layers.dense(l_c, 1, kernel_initializer=w_init, name='v') # state value

a_params = tf.get_collection(tf.GraphKeys.TRAINABLE_VARIABLES, scope=scope + '/actor')

c_params = tf.get_collection(tf.GraphKeys.TRAINABLE_VARIABLES, scope=scope + '/critic')

return mu, sigma, v, a_params, c_params

def update_global(self, feed_dict): # run by a local

SESS.run([self.update_a_op, self.update_c_op], feed_dict) # local grads applies to global net

def pull_global(self): # run by a local

SESS.run([self.pull_a_params_op, self.pull_c_params_op])

def choose_action(self, s): # run by a local

s = s[np.newaxis, :]

return SESS.run(self.A, {self.s: s})

class Worker(object):

def __init__(self, name, globalAC):

self.env = SeaOfEnv(N_S,N_A)

self.name = name

self.AC = ACNet(name, globalAC)

def work(self):

global GLOBAL_RUNNING_R, GLOBAL_EP

total_step = 1

a3c_r = []

buffer_s, buffer_a, buffer_r = [], [], []

while not COORD.should_stop() and GLOBAL_EP < MAX_GLOBAL_EP:

s = self.env.reset()

ep_r = 0

for ep_t in range(MAX_EP_STEP):

# if self.name == 'W_0':

# self.env.render()

# s = self.env.reset()

a = self.AC.choose_action(s)

s_, r = self.env.step(a, s)

# print(s)

done = True if ep_t == MAX_EP_STEP - 1 else False

ep_r += r / MAX_EP_STEP

s = s_

total_step += 1

buffer_s.append(s)

buffer_a.append(a)

buffer_r.append(r)

# normalize

if total_step % UPDATE_GLOBAL_ITER == 0 or done: # update global and assign to local net

if done:

v_s_ = 0 # terminal

else:

v_s_ = SESS.run(self.AC.v, {self.AC.s: s_[np.newaxis, :]})[0, 0]

buffer_v_target = []

for r in buffer_r[::-1]: # reverse buffer r

v_s_ = r + GAMMA * v_s_

buffer_v_target.append(v_s_)

buffer_v_target.reverse()

buffer_s, buffer_a, buffer_v_target = np.vstack(buffer_s), np.vstack(buffer_a), np.vstack(buffer_v_target)

feed_dict = {

self.AC.s: buffer_s,

self.AC.a_his: buffer_a,

self.AC.v_target: buffer_v_target,

}

self.AC.update_global(feed_dict)

buffer_s, buffer_a, buffer_r = [], [], []

self.AC.pull_global()

if done:

if len(GLOBAL_RUNNING_R) == 0: # record running episode reward

GLOBAL_RUNNING_R.append(ep_r)

else:

GLOBAL_RUNNING_R.append(0.9 * GLOBAL_RUNNING_R[-1] + 0.1 * ep_r)

print(

# self.name,

# "Ep:", GLOBAL_EP,

# "| Ep_r: %i" % GLOBAL_RUNNING_R[-1],

"%.2f" %GLOBAL_RUNNING_R[-1]

)

GLOBAL_EP += 1

break

if __name__ == "__main__":

SESS = tf.Session()

t1 = time.time()

with tf.device("/cpu:0"):

OPT_A = tf.train.RMSPropOptimizer(LR_A, name='RMSPropA')

OPT_C = tf.train.RMSPropOptimizer(LR_C, name='RMSPropC')

GLOBAL_AC = ACNet(GLOBAL_NET_SCOPE) # we only need its params

workers = []

# Create worker

for i in range(N_WORKERS):

i_name = 'W_%i' % i # worker name

workers.append(Worker(i_name, GLOBAL_AC))

COORD = tf.train.Coordinator()

SESS.run(tf.global_variables_initializer())

worker_threads = []

for worker in workers:

job = lambda: worker.work()

t = threading.Thread(target=job)

t.start()

worker_threads.append(t)

COORD.join(worker_threads)

print('Running time: ', time.time() - t1)

np.save('ppo_r', GLOBAL_RUNNING_R)

plt.plot(np.arange(len(GLOBAL_RUNNING_R)), GLOBAL_RUNNING_R)

plt.xlabel('step')

plt.ylabel('Total moving reward')

plt.show()

PPO

import tensorflow._api.v2.compat.v1 as tf

import numpy as np

import matplotlib.pyplot as plt

from sea_environment_ppo import SeaOfEnv

# from NB_FDMA_4 import FDMA

# from NB_TDMA_4 import TDMA

import os

import time

tf.reset_default_graph()

os.environ["KMP_DUPLICATE_LIB_OK"]="TRUE"

tf.disable_eager_execution()

EP_MAX = 1500

EP_LEN = 50

GAMMA = 0.9

A_LR = 0.001

C_LR = 0.008

BATCH = 64

A_UPDATE_STEPS = 10

C_UPDATE_STEPS = 10

S_DIM = 2

A_DIM = 1

# s = [e_d1, e_d2, e_d3, q_d1, q_d2, q_d3, e_r, e_d4, q_d4]

# a = [p_d1, p_d2, p_d3, alpha_d1, alpha_d2. alpha_d3, tau_t, SIC, p_d4, alpha_d4]

METHOD = [

dict(name='kl_pen', kl_target=0.01, lam=0.5),

dict(name='clip', epsilon=0.2),

][1]

class PPO(object):

def __init__(self):

self.sess = tf.Session()

self.tfs = tf.placeholder(tf.float32, [None, S_DIM], 'state')

# critic

with tf.variable_scope('critic'):

l1 = tf.layers.dense(self.tfs, 128, tf.nn.tanh)

self.v = tf.layers.dense(l1, 1)

self.tfdc_r = tf.placeholder(tf.float32, [None, 1], 'discounted_r')

self.advantage = self.tfdc_r - self.v

self.closs = tf.reduce_mean(tf.square(self.advantage))

self.ctrain_op = tf.train.AdamOptimizer(C_LR).minimize(self.closs)

# actor

pi, pi_params = self._build_anet('pi', trainable=True)

oldpi, oldpi_params = self._build_anet('oldpi', trainable=False)

with tf.variable_scope('sample_action'):

self.sample_op = tf.squeeze(pi.sample(1), axis=0)

with tf.variable_scope('update_oldpi'):

self.update_oldpi_op = [oldp.assign(p) for p, oldp in zip(pi_params, oldpi_params)]

self.tfa = tf.placeholder(tf.float32, [None, A_DIM], 'action')

self.tfadv = tf.placeholder(tf.float32, [None, 1], 'advantage')

with tf.variable_scope('loss'):

with tf.variable_scope('surrogate'):

ratio = pi.prob(self.tfa) / (oldpi.prob(self.tfa) + 1e-5)

surr = ratio * self.tfadv

if METHOD['name'] == 'kl_pen':

self.tflam = tf.placeholder(tf.float32, None, 'lambda')

kl = tf.distributions.kl_divergence(oldpi, pi)

self.kl_mean = tf.reduce_mean(kl)

self.aloss = -(tf.reduce_mean(surr - self.tflam * kl))

else:

self.aloss = -tf.reduce_mean(tf.minimum(

surr,

tf.clip_by_value(ratio, 1.-METHOD['epsilon'], 1.+METHOD['epsilon'])*self.tfadv))

with tf.variable_scope('atrain'):

self.atrain_op = tf.train.AdamOptimizer(A_LR).minimize(self.aloss)

tf.summary.FileWriter("log/", self.sess.graph)

self.sess.run(tf.global_variables_initializer())

def update(self, s, a, r):

self.sess.run(self.update_oldpi_op)

adv = self.sess.run(self.advantage, {self.tfs: s, self.tfdc_r: r})

# update actor

if METHOD['name'] == 'kl_pen':

for _ in range(A_UPDATE_STEPS):

_, kl = self.sess.run(

[self.atrain_op, self.kl_mean],

{self.tfs: s, self.tfa: a, self.tfadv: adv, self.tflam: METHOD['lam']})

if kl > 4*METHOD['kl_target']:

break

if kl < METHOD['kl_target'] / 1.5:

METHOD['lam'] /= 2

elif kl > METHOD['kl_target'] * 1.5:

METHOD['lam'] *= 2

METHOD['lam'] = np.clip(METHOD['lam'], 1e-4, 10)

else:

[self.sess.run(self.atrain_op, {self.tfs: s, self.tfa: a, self.tfadv: adv}) for _ in range(A_UPDATE_STEPS)]

# update critic

[self.sess.run(self.ctrain_op, {self.tfs: s, self.tfdc_r: r}) for _ in range(C_UPDATE_STEPS)]

def _build_anet(self, name, trainable):

with tf.variable_scope(name):

l1 = tf.layers.dense(self.tfs, 64, tf.nn.tanh, trainable=trainable)

mu = 2 * tf.layers.dense(l1, A_DIM, tf.nn.relu, trainable=trainable)

sigma = tf.layers.dense(l1, A_DIM, tf.nn.softplus, trainable=trainable)

norm_dist = tf.distributions.Normal(loc=mu, scale=sigma)

params = tf.get_collection(tf.GraphKeys.GLOBAL_VARIABLES, scope=name)

return norm_dist, params

def choose_action(self, s):

s = s[np.newaxis, :]

a = self.sess.run(self.sample_op, {self.tfs: s})[0]

return np.clip(a, -0.68, 0.66)

def get_v(self, s):

if s.ndim < 2: s = s[np.newaxis, :]

return self.sess.run(self.v, {self.tfs: s})[0, 0]

env = SeaOfEnv(S_DIM,A_DIM)

# env = TDMA()

# env = FDMA()

ppo = PPO()

all_ep_r = []

throughput = []

S_Record = np.zeros((EP_MAX, EP_LEN, 2))

start = time.time()

for ep in range(EP_MAX):

s = env.reset()

buffer_s, buffer_a, buffer_r = [], [], []

ep_r = 0

for t in range(EP_LEN):

a = ppo.choose_action(s)

s_, r = env.step(a, s)

s = s_

buffer_s.append(s)

buffer_a.append(a)

# print(a)

buffer_r.append(r)

S_Record[ep][t][0] = s[0]

S_Record[ep][t][1] = s[1]

if (s_[0]>2000 or s[0]<0 or s[1]>2000 or s[1]<0 ):

break

ep_r += r

# throughput_ += B / EP_LEN

# update ppo

if (t+1) % BATCH == 0 or t == EP_LEN-1:

v_s_ = ppo.get_v(s_)

discounted_r = []

for r in buffer_r[::-1]:

v_s_ = r + GAMMA * v_s_

discounted_r.append(v_s_)

discounted_r.reverse()

bs, ba, br = np.vstack(buffer_s), np.vstack(buffer_a), np.array(discounted_r)[:, np.newaxis]

buffer_s, buffer_a, buffer_r = [], [], []

ppo.update(bs, ba, br)

# if ep == 0: all_ep_r.append(ep_r)

# else: all_ep_r.append(all_ep_r[-1]*0.9 + ep_r*0.1)

all_ep_r.append(ep_r/EP_LEN)

np.save('ppo_r', all_ep_r)

np.save('S_record_REAL', S_Record)

print(

'Ep: %i' % ep,

#"|Ep_r: %.2f" % ep_r,

"PF: %.2f" % (ep_r/50),

#("|Lam: %.4f" % METHOD['lam']) if METHOD['name'] == 'kl_pen' else '',

)

end = time.time()

print("PPO循环运行时间:%.2f秒"%(end-start))

print("maxPF: %.2f" %max(all_ep_r))

# print("maxTP: %.2f" %max(throughput))

plt.plot(np.arange(len(all_ep_r)), all_ep_r)

plt.xlabel('Episode')

plt.ylabel('Moving averaged proportional fairness')

plt.show()

DDPG

import tensorflow._api.v2.compat.v1 as tf

import numpy as np

from scipy import io

import time

from NOMA_V1 import NOMA1

import matplotlib.pyplot as plt

tf.disable_eager_execution()

np.random.seed(1)

tf.set_random_seed(1)

##################### hyper parameters ####################

MAX_EPISODES = 400

MAX_EP_STEPS = 200

LR_A = 0.0002 # learning rate for actor

LR_C = 0.0008 # learning rate for critic

GAMMA = 0.9 # reward discount

REPLACEMENT = [

dict(name='soft', tau=0.01),

dict(name='hard', rep_iter_a=600, rep_iter_c=500)

][1] # you can try different target replacement strategies

MEMORY_CAPACITY = 10000

BATCH_SIZE = 64

RENDER = False

OUTPUT_GRAPH = True

ENV_NAME = 'Pendulum-v0'

############################### Actor ####################################

class Actor(object):

def __init__(self, sess, action_dim, action_bound, learning_rate, replacement):

self.sess = sess

self.a_dim = action_dim

self.action_bound = action_bound

self.lr = learning_rate

self.replacement = replacement

self.t_replace_counter = 0

with tf.variable_scope('Actor'):

# input s, output a

self.a = self._build_net(S, scope='eval_net', trainable=True)

# input s_, output a, get a_ for critic

self.a_ = self._build_net(S_, scope='target_net', trainable=False)

self.e_params = tf.get_collection(tf.GraphKeys.GLOBAL_VARIABLES, scope='Actor/eval_net')

self.t_params = tf.get_collection(tf.GraphKeys.GLOBAL_VARIABLES, scope='Actor/target_net')

if self.replacement['name'] == 'hard':

self.t_replace_counter = 0

self.hard_replace = [tf.assign(t, e) for t, e in zip(self.t_params, self.e_params)]

else:

self.soft_replace = [tf.assign(t, (1 - self.replacement['tau']) * t + self.replacement['tau'] * e)

for t, e in zip(self.t_params, self.e_params)]

def _build_net(self, s, scope, trainable):

with tf.variable_scope(scope):

init_w = tf.random_normal_initializer(0., 0.3)

init_b = tf.constant_initializer(0.1)

net = tf.layers.dense(s, 30, activation=tf.nn.relu,

kernel_initializer=init_w, bias_initializer=init_b, name='l1',

trainable=trainable)

with tf.variable_scope('a'):

actions = tf.layers.dense(net, self.a_dim, activation=tf.nn.tanh, kernel_initializer=init_w,

bias_initializer=init_b, name='a', trainable=trainable)

scaled_a = tf.multiply(actions, self.action_bound,

name='scaled_a') # Scale output to -action_bound to action_bound

return scaled_a

def learn(self, s): # batch update

self.sess.run(self.train_op, feed_dict={S: s})

if self.replacement['name'] == 'soft':

self.sess.run(self.soft_replace)

else:

if self.t_replace_counter % self.replacement['rep_iter_a'] == 0:

self.sess.run(self.hard_replace)

self.t_replace_counter += 1

def choose_action(self, s):

s = s[np.newaxis, :] # single state

return self.sess.run(self.a, feed_dict={S: s})[0] # single action

def add_grad_to_graph(self, a_grads):

with tf.variable_scope('policy_grads'):

# ys = policy;

# xs = policy's parameters;

# a_grads = the gradients of the policy to get more Q

# tf.gradients will calculate dys/dxs with a initial gradients for ys, so this is dq/da * da/dparams

self.policy_grads = tf.gradients(ys=self.a, xs=self.e_params, grad_ys=a_grads)

with tf.variable_scope('A_train'):

opt = tf.train.AdamOptimizer(-self.lr) # (- learning rate) for ascent policy

self.train_op = opt.apply_gradients(zip(self.policy_grads, self.e_params))

############################### Critic ####################################

class Critic(object):

def __init__(self, sess, state_dim, action_dim, learning_rate, gamma, replacement, a, a_):

self.sess = sess

self.s_dim = state_dim

self.a_dim = action_dim

self.lr = learning_rate

self.gamma = gamma

self.replacement = replacement

with tf.variable_scope('Critic'):

# Input (s, a), output q

self.a = tf.stop_gradient(a) # stop critic update flows to actor

self.q = self._build_net(S, self.a, 'eval_net', trainable=True)

# Input (s_, a_), output q_ for q_target

self.q_ = self._build_net(S_, a_, 'target_net',

trainable=False) # target_q is based on a_ from Actor's target_net

self.e_params = tf.get_collection(tf.GraphKeys.GLOBAL_VARIABLES, scope='Critic/eval_net')

self.t_params = tf.get_collection(tf.GraphKeys.GLOBAL_VARIABLES, scope='Critic/target_net')

with tf.variable_scope('target_q'):

self.target_q = R + self.gamma * self.q_

with tf.variable_scope('TD_error'):

self.loss = tf.reduce_mean(tf.squared_difference(self.target_q, self.q))

with tf.variable_scope('C_train'):

self.train_op = tf.train.AdamOptimizer(self.lr).minimize(self.loss)

with tf.variable_scope('a_grad'):

self.a_grads = tf.gradients(self.q, self.a)[0] # tensor of gradients of each sample (None, a_dim)

if self.replacement['name'] == 'hard':

self.t_replace_counter = 0

self.hard_replacement = [tf.assign(t, e) for t, e in zip(self.t_params, self.e_params)]

else:

self.soft_replacement = [tf.assign(t, (1 - self.replacement['tau']) * t + self.replacement['tau'] * e)

for t, e in zip(self.t_params, self.e_params)]

def _build_net(self, s, a, scope, trainable):

with tf.variable_scope(scope):

init_w = tf.random_normal_initializer(0., 0.1)

init_b = tf.constant_initializer(0.1)

with tf.variable_scope('l1'):

n_l1 = 30

w1_s = tf.get_variable('w1_s', [self.s_dim, n_l1], initializer=init_w, trainable=trainable)

w1_a = tf.get_variable('w1_a', [self.a_dim, n_l1], initializer=init_w, trainable=trainable)

b1 = tf.get_variable('b1', [1, n_l1], initializer=init_b, trainable=trainable)

net = tf.nn.relu(tf.matmul(s, w1_s) + tf.matmul(a, w1_a) + b1)

with tf.variable_scope('q'):

q = tf.layers.dense(net, 1, kernel_initializer=init_w, bias_initializer=init_b,

trainable=trainable) # Q(s,a)

return q

def learn(self, s, a, r, s_):

self.sess.run(self.train_op, feed_dict={S: s, self.a: a, R: r, S_: s_})

if self.replacement['name'] == 'soft':

self.sess.run(self.soft_replacement)

else:

if self.t_replace_counter % self.replacement['rep_iter_c'] == 0:

self.sess.run(self.hard_replacement)

self.t_replace_counter += 1

##################### Memory ####################

class Memory(object):

def __init__(self, capacity, dims):

self.capacity = capacity

self.data = np.zeros((capacity, dims))

self.pointer = 0

def store_transition(self, s, a, r, s_):

transition = np.hstack((s, a, [r], s_))

index = self.pointer % self.capacity # replace the old memory with new memory

self.data[index, :] = transition

self.pointer += 1

def sample(self, n):

assert self.pointer >= self.capacity, 'Memory has not been fulfilled'

indices = np.random.choice(self.capacity, size=n)

return self.data[indices, :]

env = NOMA1()

state_dim = 3

action_dim = 7

action_bound = [2, 2, 2,2,2,2,2]

# all placeholder for tf

with tf.name_scope('S'):

S = tf.placeholder(tf.float32, shape=[None, state_dim], name='s')

with tf.name_scope('R'):

R = tf.placeholder(tf.float32, [None, 1], name='r')

with tf.name_scope('S_'):

S_ = tf.placeholder(tf.float32, shape=[None, state_dim], name='s_')

sess = tf.Session()

# Create actor and critic.

# They are actually connected to each other, details can be seen in tensorboard or in this picture:

actor = Actor(sess, action_dim, action_bound, LR_A, REPLACEMENT)

critic = Critic(sess, state_dim, action_dim, LR_C, GAMMA, REPLACEMENT, actor.a, actor.a_)

actor.add_grad_to_graph(critic.a_grads)

sess.run(tf.global_variables_initializer())

M = Memory(MEMORY_CAPACITY, dims=2 * state_dim + action_dim + 1)

# if OUTPUT_GRAPH:

# tf.summary.FileWriter("logs/", sess.graph)

var = 3 # control exploration

all_ep_r = []

t1 = time.time()

for i in range(MAX_EPISODES):

s = env.reset()

ep_reward = 0

for j in range(MAX_EP_STEPS):

s = env.reset()

# Add exploration noise

a = actor.choose_action(s)

a = np.clip(np.random.normal(a, var), -2, 2) # add randomness to action selection for exploration

s_, r, rate1, rate2, rate3, sec1, sec2, sec3 = env.step(a, s)

M.store_transition(s, a, r / 10, s_)

if M.pointer > MEMORY_CAPACITY:

var *= .9995 # decay the action randomness

b_M = M.sample(BATCH_SIZE)

b_s = b_M[:, :state_dim]

b_a = b_M[:, state_dim: state_dim + action_dim]

b_r = b_M[:, -state_dim - 1: -state_dim]

b_s_ = b_M[:, -state_dim:]

critic.learn(b_s, b_a, b_r, b_s_)

actor.learn(b_s)

s = s_

ep_reward += r / MAX_EP_STEPS

if j == MAX_EP_STEPS - 1:

# print('Episode:', i, ' Reward: %i' % int(ep_reward), 'Explore: %.2f' % var, )

print("%.2f" % ep_reward)

if ep_reward > -300:

RENDER = True

break

if i == 0:

all_ep_r.append(ep_reward)

else:

all_ep_r.append(all_ep_r[-1] * 0.9 + ep_reward * 0.1)

print(a)

print('辅助用户传输速率为:%0.2f ,%0.2f ,%0.2f ' %(rate1, rate2, rate3))

print('传输用户安全速率为:%0.2f ,%0.2f ,%0.2f ' %(sec1, sec2, sec3))

print('Running time: ', time.time() - t1)

plt.plot(np.arange(len(all_ep_r)), all_ep_r)

plt.xlabel('Episode')

plt.ylabel('Moving averaged proportional fairness')

plt.show()

io.savemat('./matfile/dpg3.mat', {"reword": all_ep_r})

Lingo

LINGO全称是Linear Interactive and General Optimizer的缩写—交互式的线性和通用优化求解器。它是一套设计用来帮助您快速,方便和有效的构建和求解线性,非线性,和整数最优化模型的功能全面的工具。包括功能强大的建模语言,建立和编辑问题的全功能环境,读取和写入Excel和数据库的功能,和一系列完全内置的求解程序.

Lingo 是使建立和求解线性、非线性和整数最佳化模型更快更简单更有效率的综合工具。Lingo 提供强大的语言和快速的求解引擎来阐述和求解最佳化模型。

Lingo求解TSP问题

matlab与lingo对比

这个没法比较,因为两者的定位不同.就像我们无法比较word和excel那个好用一样.一般是按需选择,交叉使用的关系.lingo是专门处理优化问题的软件,比matlab自带的优化工具箱强大,但功能单一,无法处理别的问题.matlab是综合类的工程数学软件,利用其语言和自带命令可以方便地编写处理各种问题的程序包,自带的工具箱涵盖的范围也很丰富.两者的关系可以说一个是小而精,一个是大而全.

更多推荐

已为社区贡献2条内容

已为社区贡献2条内容

所有评论(0)